The past decade has really been something else for artificial intelligence. We’ve witnessed some incredible innovations, especially transformer models taking center stage. They started out in natural language processing, powering models like Google’s BERT and OpenAI’s GPT series. But here’s the kicker: they’re not just sticking to language anymore. Transformers are now diving into the world of computer vision, doing things we once thought were totally out of reach.

We’re really seeing a shift in the world of image recognition. Remember when Convolutional Neural Networks (CNNs) were the only serious option? Well, they’re still widely used, but transformers are stepping into the spotlight. Given enough training data, they’re often better at extracting features, adapting to different tasks, and scaling up for larger applications. This makes them very exciting for everything from self-driving cars to medical imaging.

But, you might be wondering, how do they manage to outshine the old-school methods?

In this article, we’re going to dive into how transformer models are changing the game in computer vision. We’ll look at what they can do, where they’re being used, and what the future might have in store for this fascinating evolution in AI. So, let’s get into it!

Understanding Transformers in AI

1. From NLP to Computer Vision

Back in 2017, transformers shook up the field with a groundbreaking paper titled “Attention Is All You Need.” It introduced a fresh way of building neural networks that relies on attention mechanisms to weigh how the different parts of an input relate to one another. This was a game changer in natural language processing, leading to some incredible models like BERT, which are awesome at grasping context in text.

Then, fast-forward to 2020, researchers decided to take this whole idea and adapt it for computer vision. That’s how Vision Transformers, or ViTs, came into play. So, what do they do? Well, they slice images into smaller patches and treat these patches like words in a sentence. This means the model can take in the full image all at once, instead of piecemeal. This shift from working with words to working with images has really kicked off a new chapter in deep learning for computer vision.
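To make the “patches as words” idea a bit more concrete, here’s a minimal sketch in plain Python. The function name `image_to_patches` and the toy 4x4 image are made up for illustration, and a real ViT would go on to project each flattened patch into an embedding and add position information, which this sketch leaves out.

```python
def image_to_patches(image, patch_size):
    """Split a 2D image (a list of rows) into flattened, non-overlapping patches.

    Patches come back in row-major order, like words in a sentence.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size square into a single list.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A toy 4x4 grayscale "image" with pixel values 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, patch_size=2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```

With the same logic, a 224x224 image cut into 16x16 patches becomes a “sentence” of 196 tokens.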

2. Why Transformers Are Game-Changers

Transformers really shine in the world of computer vision, and there are a few key reasons for that.

- Scalability: Their accuracy tends to keep improving as the model and the dataset grow, which makes them a good fit for intricate vision tasks.

- Global Context: Unlike CNNs, which tend to zoom in on specific parts of an image, transformers step back and see the whole picture. They observe relationships across the entire image, which can make a big difference in understanding what’s going on.

- Versatility: The same transformer architecture can be adapted to many tasks, whether you’re classifying images or detecting objects.

- Top Performance: Vision Transformers (ViTs) often hit it out of the park, achieving top-notch results on benchmarks like ImageNet, especially when they’ve been pre-trained on a large scale.
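The global-context point in the list above can be sketched with a tiny, dependency-free version of scaled dot-product self-attention. Treat it as an illustration under stated assumptions rather than a real model: the function names and the three toy “patch embeddings” are invented here, and the learned query, key, and value projections that actual transformers use are replaced by the raw token vectors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    Simplification: the raw token vectors act as queries, keys, and values.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Compare this token with every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted mix of all tokens (global context).
        outputs.append([
            sum(w * value[i] for w, value in zip(row, tokens))
            for i in range(dim)
        ])
    return outputs, weights


# Three toy patch embeddings; the first and third are similar.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of `weights` sums to 1, and the first token puts more weight on the similar third token than on the dissimilar second one, no matter how far apart they sit in the sequence.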

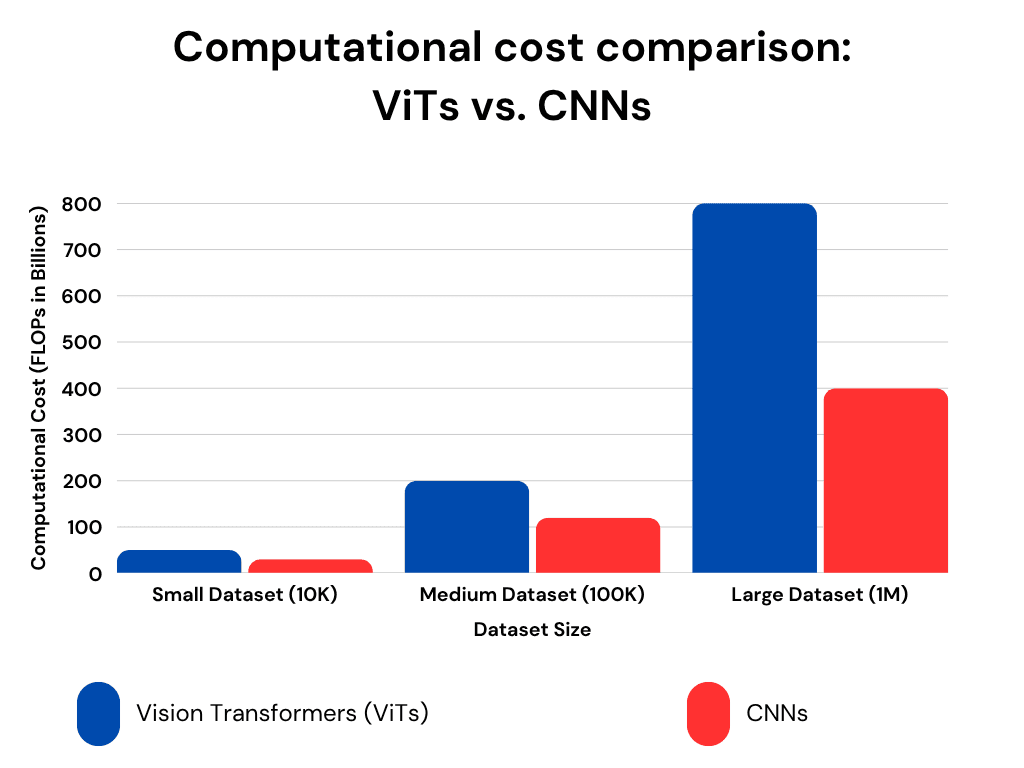

So, these advantages definitely make transformers a powerful tool in computer vision. But, you know, there’s always a catch. Their computational needs can raise some concerns, especially when we talk about using them in settings where resources are tight. It’s a balancing act, for sure!

How Transformers Are Transforming Computer Vision

Transformers are really shaking things up in various fields. It’s fascinating to see how they’re changing the landscape. Let’s dive into some of the ways they’re making an impact:

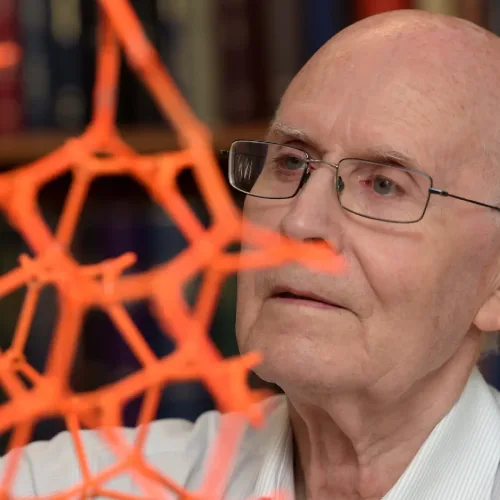

1. Medical Imaging

- Transformers improve tumor detection, X-ray analysis, and MRI scan interpretation.

- Their ability to relate distant regions of an image to each other can result in higher accuracy than CNNs on some tasks.

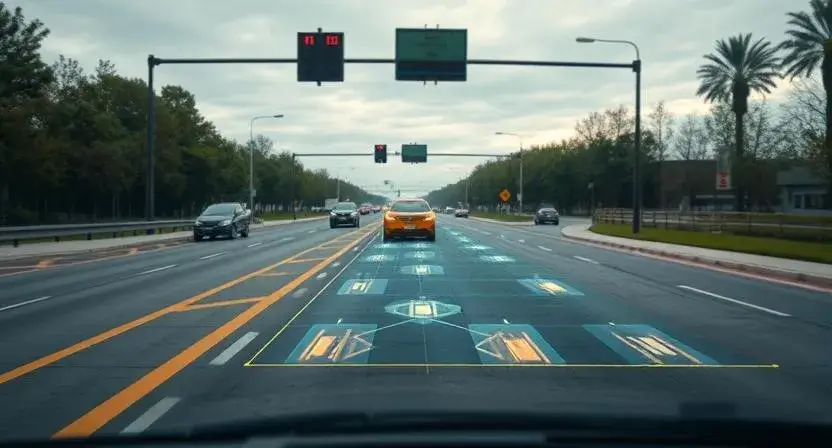

2. Autonomous Vehicles

- In self-driving cars, transformers enhance object recognition and path prediction.

- Companies like Tesla and Waymo are incorporating vision transformers for safer navigation.

3. AI-Powered Image Generation

- Tools like DALL-E and Midjourney use transformers to create hyperrealistic AI-generated art.

- This technology is revolutionizing creative industries, from marketing to movie production.

4. Security & Surveillance

- AI-powered facial recognition and video analytics benefit from transformers’ superior feature detection.

- Law enforcement and cybersecurity firms use this tech to identify threats and anomalies efficiently.

CNN vs. Transformers in Computer Vision

| Feature | CNNs | Transformers |
|---|---|---|
| Processing Style | Local feature detection | Global attention mechanism |
| Image Coverage | Local regions first; wider context builds up layer by layer | Every patch can attend to every other patch from the first layer |
| Adaptability | Same learned filters applied to every input | Attention weights adapt to each input |
| Computational Cost | Lower | Higher (requires more power) |
| Accuracy in Complex Images | Moderate | Often higher with large-scale pre-training (captures context better) |

Transformers are taking AI-driven vision to a whole new level. It’s pretty amazing how they’re making real-time, large-scale image analysis not just more accurate, but also way more adaptable. It feels like we’re just scratching the surface of what’s possible!

Real-World Applications of Transformers in Computer Vision

1. Autonomous Vehicles and Object Detection

Transformers are really shaking up the world of self-driving cars, especially with models like the DEtection TRansformer, or DETR for short. This one was brought to life by the folks at Facebook AI Research. What’s cool about DETR is how it makes object detection a lot simpler: it directly predicts a set of bounding boxes and labels, skipping hand-designed steps such as anchor boxes and non-maximum suppression that older pipelines rely on. On the COCO dataset, it performed on par with a well-tuned Faster R-CNN baseline.
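Predicting boxes and labels directly works because DETR treats detection as a set prediction problem: during training, each ground-truth object is paired with exactly one prediction. Here’s a rough sketch of that pairing step. The cost function is deliberately simplified (a class mismatch penalty plus an L1 box distance), the brute-force search stands in for the Hungarian algorithm, and every name and number below is hypothetical.

```python
from itertools import permutations


def l1_distance(box_a, box_b):
    """Sum of absolute coordinate differences between two boxes."""
    return sum(abs(a - b) for a, b in zip(box_a, box_b))


def match_predictions(predictions, targets):
    """Return the lowest-cost one-to-one assignment of predictions to targets.

    Brute force over permutations is fine for a toy example; real
    implementations use the Hungarian algorithm instead.
    """
    def cost(pred, target):
        class_cost = 0.0 if pred["label"] == target["label"] else 1.0
        return class_cost + l1_distance(pred["box"], target["box"])

    best_total, best_assignment = float("inf"), None
    for chosen in permutations(range(len(predictions)), len(targets)):
        total = sum(cost(predictions[p], targets[t]) for t, p in enumerate(chosen))
        if total < best_total:
            best_total, best_assignment = total, chosen
    # best_assignment[t] is the index of the prediction matched to target t.
    return best_assignment


# Boxes are (center_x, center_y, width, height), normalized to 0..1.
predictions = [
    {"label": "car", "box": (0.70, 0.60, 0.30, 0.20)},
    {"label": "no object", "box": (0.50, 0.50, 0.10, 0.10)},
    {"label": "pedestrian", "box": (0.21, 0.52, 0.10, 0.30)},
]
targets = [
    {"label": "pedestrian", "box": (0.20, 0.50, 0.10, 0.30)},
    {"label": "car", "box": (0.72, 0.58, 0.30, 0.22)},
]
print(match_predictions(predictions, targets))  # (2, 0)
```

The leftover prediction is the one labeled “no object”, which is how a fixed number of output slots can describe an image with fewer objects.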

So, why does this matter? It’s all about safety. By improving real-time detection of things like pedestrians, other vehicles, and random obstacles, it really boosts the safety of autonomous cars. And here’s something interesting: companies like Tesla are digging into these transformer-based AI vision systems too. It just goes to show how much of an impact this technology is having in the industry.

2. Medical Imaging and Diagnostics

In the world of healthcare, transformers are revolutionizing things when it comes to medical imaging. Those Vision Transformers (ViTs) are quite impressive: they can take a good look at X-rays, MRIs, and CT scans and analyze them with remarkable accuracy. This is a game-changer, especially for spotting diseases early on, like pneumonia or even cancer.

And some studies from 2025 are saying that these transformers might actually be able to do certain tasks just as well as, or even better than, radiologists. How cool is that? It’s all due to their knack for processing really complex and varied data.

So, what does this mean for patients? Well, it could mean quicker and more reliable diagnoses, which is pretty important for improving treatments. It’s exciting to think about how technology is helping us make healthcare better!

Challenges and Future of Transformers in Computer Vision

Even though transformer models are pretty amazing at what they do, they still run into some bumps when it comes to computer vision. Let’s dive into a few of the main issues:

1. Computational Power & Cost

Transformers need a ton of computing power to handle big datasets effectively. You see, unlike CNNs, which look at an image one small neighborhood at a time, transformers compare every patch of an image with every other patch. Pretty cool, right? But the cost of that comparison grows with the square of the number of patches, so they take up a lot of memory, which can be quite the headache.
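A bit of back-of-the-envelope arithmetic shows where the memory goes. The helper below is a hypothetical sketch that counts the attention scores for one head in one layer, assuming square images, 16x16 patches, and no extra tokens such as a class token.

```python
def attention_cost(image_size, patch_size):
    """Return (num_tokens, num_attention_scores) for a square image.

    Every token is compared with every token, so the score matrix
    has num_tokens ** 2 entries.
    """
    tokens_per_side = image_size // patch_size
    num_tokens = tokens_per_side ** 2
    return num_tokens, num_tokens ** 2


for size in (224, 448, 896):
    tokens, scores = attention_cost(size, patch_size=16)
    print(f"{size}x{size}: {tokens} tokens, {scores:,} attention scores")
```

Doubling the image side quadruples the number of tokens and multiplies the attention scores by 16: a 224x224 image gives 196 tokens and 38,416 scores, while 448x448 gives 784 tokens and 614,656 scores.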

To make it work, you really need some fancy hardware like GPUs or TPUs, and yeah, that can drive up costs for companies and researchers alike. And as more folks jump on the AI bandwagon, it’s becoming super clear that there’s a big demand for smarter architectures. We need solutions that can cut down on the computational load but still keep things accurate.

2. Interpretability & Trust Issues

Transformer models get a lot of flak for being like black boxes. It’s tough to figure out how they make their decisions. Unlike CNNs, which have a pretty clear way of pulling out features, transformers use these complicated attention mechanisms. It can be really hard to wrap your head around how they come up with their predictions.

And this is a big deal in fields like healthcare and finance. I mean, when AI is making decisions that affect people’s lives or money, we really need to understand what’s going on behind the scenes. If we can’t, it just leads to trust issues. Although, researchers are on it! They’re working on explainable AI techniques to help make these models more understandable and boost user confidence.

3. Ethical Concerns & AI Bias

There’s been a lot of talk lately about how AI is increasingly being used in security and surveillance. But with that, we’ve also got to face some serious ethical concerns, especially when it comes to those transformer-based models. I mean, think about it: when these models are used for things like facial recognition or automated decision-making, they can unintentionally amplify the biases that are already in the training data. This can lead to some pretty unfair results, which is definitely concerning.

And don’t even get me started on deepfakes and misinformation. They’re getting smarter all the time, right? It really makes you wonder about how we’re going to use AI responsibly. To tackle these issues, regulators are stepping up, proposing ethical frameworks for AI to make sure that when we implement these transformer-based systems, they’re fair and unbiased.

While these challenges are real, there’s a lot of ongoing research in AI working to solve them. We’re seeing the development of hybrid AI models and better interpretability techniques, plus ethical AI policies that aim to make these transformer-based vision systems more accessible, reliable, and scalable as we move forward. So, there’s hope on the horizon!

Future Trends & Predictions in Transformer-Based Computer Vision

1. Widespread Adoption of Vision Transformers (ViTs)

Vision Transformers, or ViTs as they’re often called, have really taken off lately. Why? Well, it’s all about how they handle images. Instead of just picking out bits and pieces, they look at the whole picture, which makes a big difference. This kind of approach is leading to better accuracy, and you know what that means: more industries are starting to use them.

Think about healthcare, self-driving cars, and security. These fields are getting a serious upgrade due to ViTs. Big names like Google, Meta, and Tesla are jumping on the bandwagon, integrating these models into their AI systems. They’re using them to improve things like object recognition, medical diagnostics, and even automated surveillance. It’s pretty exciting to see how this technology is shaping the future!

2. Hybrid AI Models for Greater Efficiency

It looks like the combination of CNNs and transformers is really set to take over the AI scene in the next few years. These hybrid models are pretty cool because they merge the efficiency of CNNs with the adaptability of transformers. The aim is lower computational cost without giving up much performance.

This kind of integration is especially useful for real-time applications, like robotics, autonomous navigation, and intelligent surveillance. In these areas, fast processing speed is crucial. So, it’ll be exciting to see how this all unfolds!

3. Self-Supervised Learning & AI Generalization

AI models are really starting to shift away from needing all that labeled data. It’s pretty cool because this makes them a lot more scalable for all sorts of applications. Imagine the possibilities! They can learn directly from raw image inputs, which is likely going to boost their accuracy, especially in fast-changing situations like disaster response or when you’re interacting with personalized AI assistants.
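How can a model learn from raw images with no labels? One popular recipe is masked image modeling: hide most of the patches and ask the model to fill them in from the ones it can still see. The article doesn’t name a specific method, so take this as one illustrative assumption, with a hypothetical `mask_patches` helper, toy patches, and an arbitrary 75% mask ratio.

```python
import random


def mask_patches(patches, mask_ratio, seed=0):
    """Hide a random subset of patches and return (visible, hidden_targets).

    visible maps patch index -> patch for the patches the model may see;
    hidden_targets maps index -> patch for the ones it must reconstruct.
    """
    rng = random.Random(seed)
    num_masked = int(len(patches) * mask_ratio)
    masked = set(rng.sample(range(len(patches)), num_masked))
    visible = {i: p for i, p in enumerate(patches) if i not in masked}
    hidden_targets = {i: p for i, p in enumerate(patches) if i in masked}
    return visible, hidden_targets


patches = [[i, i + 1] for i in range(0, 16, 2)]  # 8 toy patches
visible, hidden_targets = mask_patches(patches, mask_ratio=0.75)
print(sorted(visible))         # indices the model gets to see
print(sorted(hidden_targets))  # indices it must predict from context
```

The image itself supplies the training signal: the hidden patches are the “labels”, so no human annotation is needed.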

And here’s the thing, as these self-supervised learning techniques keep evolving, we’re going to see transformer-based systems becoming even more flexible and user-friendly.

4. AI-Powered Creativity & Art Generation

Transformers are also shaking things up in the world of AI-generated art, film, and those super realistic virtual experiences. Take tools such as DALL-E and Midjourney, for instance; they’re perfect examples of how these transformers are totally changing the game in content creation.

Actually, the entertainment and gaming sectors are jumping on the bandwagon, using AI to amp up visual effects and storytelling. It’s like they’re tapping into a whole new level of creativity. Honestly, it’s fascinating to see how AI is becoming such a key player in the world of artistic innovation.

5. Ethical AI & Regulatory Advancements

With AI vision technologies booming like they are, it’s no surprise that governments and organizations are really pushing for fairness, accountability, and transparency in how AI makes decisions. They’re talking about stricter rules to keep things in check, especially when it comes to deepfakes, facial recognition, and the ethics of surveillance. Their goal is to make sure these AI advancements stick to responsible development principles. Honestly, creating ethical AI frameworks could help us build a safer and fairer environment, steering clear of biased or harmful uses.

And looking at the future, it seems pretty clear that transformers are going to keep influencing AI-driven vision. They’re set to boost efficiency and adaptability, all while promoting responsible automation. As different industries start weaving these transformer models into their operations, we’re likely to see a major shift in how machines understand and interact with visual data.

Conclusion

Transformers are changing the game in artificial intelligence, linking natural language processing with computer vision in a way that we’ve never seen before. It’s pretty impressive, honestly. They’re not just versatile; they’re performing at levels that are making big waves in critical areas like healthcare and transportation. If you’re a student or a professional, getting a grip on transformers is very important if you want to keep up in this fast-paced world of AI.

What are your thoughts on transformers revolutionizing computer vision? Do you believe they will replace CNNs entirely in AI-driven image processing? Let’s discuss! Share your thoughts in the comments.